Artificial Intelligence vs. Academic Integrity

Artificial Intelligence (AI) is a tool that has become increasingly prevalent in the education system. A trail of controversies follows it, including the notion that AI slowly eliminates the necessity for acquiring knowledge, a scary acknowledgment for those working in the field of education. AI’s adaptive technology continues to threaten learning environments, with no sign of slowing down thus far.

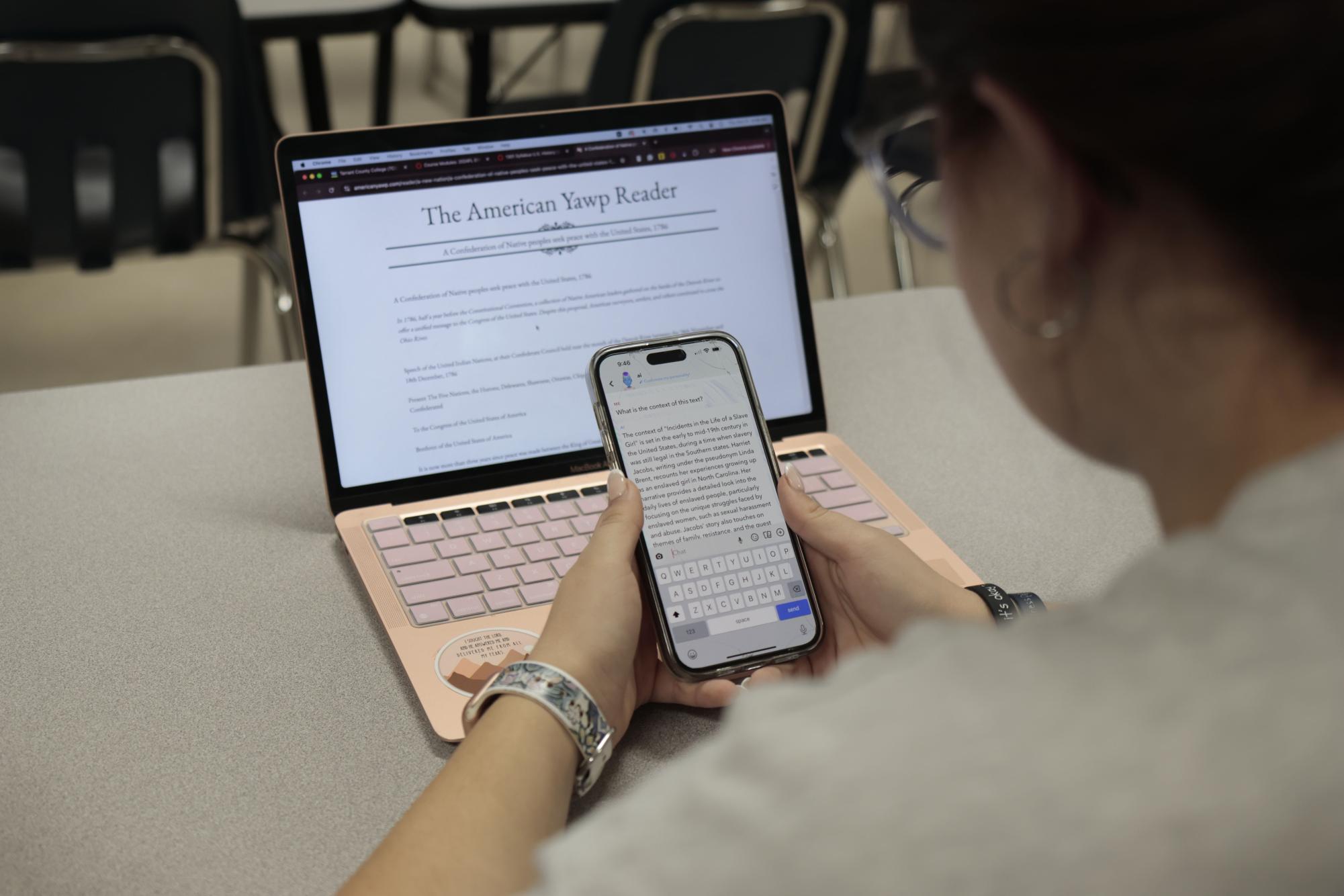

According to research conducted by Internet Matters, four in 10 children attest to using generative AI, with half being 13- to 14-year-olds. Additionally, a survey estimates that 54% of students who engage with AI use it to complete schoolwork. A lack of guidance from the Department of Education leaves both teachers and parents questioning the rising dominance of generative AI.

Not to mention, over-dependence on Artificial Intelligence in the classroom has proved a hindrance to students’ development of vital skills like critical thinking and logical reasoning. MobileGuardian notes that although applying AI to homework may get the job done faster, it completely defeats the purpose of the homework: to practice the skills a student learns in class. Ms. Danielle Panzarella, a social studies teacher, thinks much the same.

“I think we have to set very careful limits on what is allowed and what would be considered plagiarism/cheating when using AI in class,” Ms. Panzarella said. “However, I would disagree entirely with students using [Google] AI summaries as the answers to their questions and would not want to see those summaries copied and pasted into the material that students turn in for a grade–mostly because they have not taken the time to verify or think critically about the answer they were given.”

An opinion piece by theNEXUS discusses a study by Dr. Ahmed, an assistant professor in engineering management. The study, which involved 285 students, attributed 68.9% of laziness and 27.7% of the loss of decision-making skills to artificial intelligence use. Overuse of AI creates a cycle of dependence that leaves students lacking key cognitive processes.

“I don’t think it is any one specific cause, but certainly a combination of these factors. On top of these two, many students have a lot of demands on their time these days,” Ms. Panzarella said. “When we get overwhelmed, it’s tempting to look for ways to cut corners and save ourselves some time. I think most students who use AI do so because they don’t feel like they have the time needed to engage with the material, but they also don’t want to see their grades suffer if they turn something in late (or not at all).”

Walden University notes both benefits and disadvantages of AI. It helps speed up previously time-consuming processes, like reaching out to a mentor who may be unavailable, but it is also known to perpetuate isolation because students depend more on their AI generator than on the actual teacher of their content. Another of Legacy’s social studies teachers, Ms. Abbigayle Marion, views AI from a similar perspective.

“I believe that, as with anything, there is a time and place. AI should not be a substitute for human intelligence but as an addition to,” Ms. Marion said. “Students, particularly teenagers, are often tempted to replace their knowledge, skill practice or critical thinking because it is far easier to utilize computers. This is dangerous, and why we see pushback from teachers.”

As of now, Artificial Intelligence technology is in its early stages, and students who use it to complete their assignments will only improve at remaining under the radar of their educators. An opinion piece by The Washington Post discussed a study in which college students and professors were asked to estimate the percentage of students who use AI to cheat. The results showed that students assumed 60% of their peers used AI, and professors guessed at least 40% of their students used AI to complete classwork. These estimates, in the grand scheme of things, are high, concerningly so.

“Unfortunately, the scary part of AI is we have now found a way to substitute student knowledge and skill base with computers, and this is where I am worried as they enter college and the adult world,” Ms. Marion said. “Even if you may never use AP World History in the real world, critical thinking, argumentation, use of evidence and understanding cause and effect are all very real adult skills that cannot be recreated—thoroughly—by a computer.”

The consequences of getting caught cheating with AI are nothing to scoff at, either. Ameer Khatri Consulting discusses how educational institutions consider academic dishonesty a serious offense, which can result in penalties such as failing grades, academic probation or even expulsion. Students must decide if these repercussions are worth one essay grade. Kamryn Ross, 12, has reflected on the ramifications of cheating with AI and avoids it altogether.

“My view on AI has remained pretty constant as a result of teacher warnings and threats of consequences,” Ross said. “I view AI as a tool that has dangers associated with it, so I understand the consequences that come with using AI in the classroom in a way that would foster cheating or plagiarism.”

A prime principle of education is that of academic integrity, which, according to the University of Wollongong, allows students and staff the liberty to craft new ideas, knowledge and creative works while still respecting and acknowledging the work of others. This thought process is fundamental to learning, teaching and research.

“If [students] wrote it themselves, they should be able to easily explain their thoughts. If artificial intelligence wrote it for them, [students] will probably have trouble clarifying their main ideas because they didn’t actually take the time to think through them,” Ms. Panzarella said. “Value knowledge enough to find the answer for yourself, and value yourself enough to know your thoughts are worth sharing.”

Artificial intelligence (AI) is revolutionizing the educational landscape, bringing transformative benefits but also raising ethical concerns, particularly around academic integrity. AI tools like ChatGPT, Grammarly, and specialized educational platforms have become powerful resources for students and educators. However, these tools also raise questions about the boundary between legitimate help and academic dishonesty. This struggle to balance academic integrity with the advantages AI offers is one that educators, students, and institutions are navigating with increasing urgency.

The adoption of AI in education is reshaping how students learn, teachers instruct, and institutions manage academic processes. AI-driven tools help personalize learning, provide feedback, and support student development. Adaptive learning platforms tailor educational content to individual needs, while language models like ChatGPT can assist students with ideas, draft writing, and even explain complex concepts.

These benefits are valuable, particularly in remote learning environments where students might feel isolated from direct interaction with instructors. AI also aids teachers, assisting them in grading, providing resources, and even generating curriculum ideas. Despite these advantages, the powerful capabilities of AI also present new avenues for academic dishonesty.

AI’s potential misuse in the classroom primarily centers around plagiarism, unauthorized collaboration, and dependence on AI-generated work. Students can use AI tools to generate essays, answer assignments, or find solutions to complex problems, sometimes bypassing the original intent of their assignments.

For instance, when students use AI to generate essays or solve equations, they might not fully engage in the learning process, thus limiting their understanding and development. As a result, teachers may face challenges assessing whether students genuinely comprehend material, as AI-generated content can closely resemble a student’s work, making it difficult to distinguish between original and AI-assisted contributions.

Educators are finding it increasingly challenging to uphold academic integrity while still encouraging students to use beneficial AI tools. Institutions are developing policies, but implementing and enforcing these policies requires more than punitive measures—it involves clear guidelines and proactive communication about AI’s appropriate use.

However, staying updated on the evolving capabilities of AI technologies is difficult. Tools for detecting AI-generated content are emerging, but these are often limited in accuracy and may not be able to keep pace with rapidly advancing AI. Educators must also consider fairness, as reliance on detection tools alone could result in false accusations or a lack of understanding of legitimate uses of AI.

Educational institutions play a vital role in fostering a culture of integrity that adapts to technological advancements. Policies that balance access to AI with academic expectations are necessary to guide students and teachers. Some schools have already started to embrace guidelines around AI, similar to policies on acceptable sources for research or tools like calculators.

Institutions can also implement educational programs to inform students about the ethical implications of using AI. Academic integrity policies should include clear definitions of what constitutes appropriate versus inappropriate use of AI, helping students make informed decisions.

To reconcile academic integrity with AI’s transformative potential, a proactive, multifaceted approach is essential:

1. Clear Guidelines: Institutions should clearly define acceptable AI use and communicate these policies effectively to students and faculty.
2. AI Literacy Education: Both students and teachers should be educated about AI’s capabilities, ethical considerations, and responsible use. This may include courses, seminars, or integrated curriculum elements.
3. Promoting Accountability: Educators can design assignments that encourage original thinking and creativity, making it more challenging for students to rely solely on AI.
4. Improved Detection Tools: Schools should continue to explore AI-detection tools, with a focus on developing ones that distinguish between assistance and plagiarism.
5. Encouraging Collaboration: A collaborative dialogue between students, teachers, and administrators can help address concerns and clarify expectations around AI use in education.

The balance between academic integrity and the beneficial use of AI is a pressing challenge for modern education. By fostering a culture of integrity, providing guidance on appropriate AI use, and developing fair policies, educational institutions can navigate this complex landscape effectively. The goal is not to eliminate AI from the classroom but to ensure it supports genuine learning and intellectual growth, upholding the fundamental principles of academic integrity.

Did you notice a difference between the articles? Well, the first thing I see is the lack of people; AI lacks people. The first article takes into account the voices of the educators being affected, and how they view the direct impacts of artificial intelligence in their classrooms. The AI article simply tells you how every teacher “feels” by generalizing a simple consensus. Would you have known that the teachers at Legacy don’t utterly despise AI, but see it as a valuable resource with a time and place for its application? AI wouldn’t have told you that—in fact, it didn’t.

If there’s anything at all you should’ve taken away from this, it’s that AI is not a voice for the people. AI does not feel, AI does not hope, and AI doesn’t dream of a better future for the field of education. It thinks and acts, but not like a human, and should not be seen as a replacement for a student or educator.

Your donation will support the student journalists of Mansfield Legacy High School. Your contribution will allow us to purchase equipment and cover our annual website hosting costs and travel to media workshops.

Antoinette Dick • Jul 20, 2025 at 11:02 am

The articles are very interesting. There are a lot of good points as far as plagiarism and students not doing their work correctly. Even though many students are using AI (Artificial Intelligence) to complete their assignments, I strongly believe: what was everyone expecting? AI is here to stay. With all this technology comes great responsibility, with the guidance of teachers, parents and the students themselves.

Honesty is the best policy. We can teach our children to do the right thing, but they will do their own thing.

Antoinette Dick • Jul 20, 2025 at 10:43 am

I am not completely against AI, let alone technology. However, as a mother, I am blessed that both my kids have done things correctly when it comes to their education. My husband and I let them know these tools are okay to use as guidance, but you have to use your mind and think for yourself.

Jared • Oct 31, 2024 at 11:11 am

I think AI will definitely have some positive uses in education at some point. The main benefit is that you can ask any question no matter how specific, and the AI will try to answer it. That can be very useful for individual learning and trying to formulate an argument into words. Overall, I guess there is a lot of “user discretion” regarding how good AI is to have.

Miranda Cervantes • Oct 31, 2024 at 11:03 am

I think that all the information that is given is really good and interesting.